As a tech enthusiast and DIY advocate, I’m always looking for ways to optimize my workflow and explore new technologies. Recently, I embarked on an exciting project: setting up my own Virtual Private Server (VPS) to host OpenWebUI and other AI tools. This move has not only proven to be cost-effective but has also opened up a world of possibilities for customization and experimentation. Let me walk you through my journey and the setup process.

Why I Chose to Self-Host

The decision to move away from subscription-based AI services to a self-hosted solution was driven by several factors:

- Cost-effectiveness: While subscription services are convenient, they can quickly become expensive, especially when using multiple AI models.

- Flexibility: Self-hosting allows me to use and combine various AI models from providers like DeepInfra, OpenAI, Claude, Gemini, and Perplexity.

- Customization: I can tailor the setup to my specific needs and experiment with different configurations.

- Learning opportunity: The process of setting up and managing a VPS is an invaluable learning experience.

My VPS Setup

I opted for the KVM 4 plan from Hostinger, which admittedly is overkill for just running OpenWebUI. A KVM 1 plan would suffice for that purpose alone. However, I had bigger plans in mind. I wanted to run additional applications like Flowise, Dify, Wallos, and Code Server, so the extra resources have come in handy.

Domain and Reverse Proxy

I already had a domain from Bluehost, which I’ve put to good use for this project. To manage the reverse proxy and ensure smooth routing to the various applications, I’m using CloudPanel. This setup allows me to easily manage multiple subdomains and SSL certificates for each application.
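To make the routing idea concrete, here is a minimal Python sketch that renders the kind of nginx server block a reverse proxy uses to forward one subdomain to one local port. CloudPanel generates and manages its own vhost files, so treat this purely as an illustration: the subdomain names and ports are hypothetical placeholders, and the certificate directives that Let's Encrypt would add are left out.

```python
from typing import Dict

# Hypothetical subdomain -> local port mapping; real names and ports will differ.
APPS: Dict[str, int] = {
    "chat.example.com": 3000,
    "flows.example.com": 3001,
}


def render_server_block(server_name: str, port: int) -> str:
    """Render a minimal nginx server block that proxies to a loopback port.

    The ssl_certificate / ssl_certificate_key lines are intentionally omitted;
    a real vhost needs them for the "listen 443 ssl" line to work.
    """
    return (
        "server {\n"
        "    listen 443 ssl;\n"
        f"    server_name {server_name};\n"
        "    location / {\n"
        f"        proxy_pass http://127.0.0.1:{port};\n"
        "        proxy_set_header Host $host;\n"
        "        proxy_set_header X-Forwarded-Proto $scheme;\n"
        "        # WebSocket upgrade headers, which chat UIs commonly need\n"
        "        proxy_http_version 1.1;\n"
        "        proxy_set_header Upgrade $http_upgrade;\n"
        '        proxy_set_header Connection "upgrade";\n'
        "    }\n"
        "}\n"
    )


if __name__ == "__main__":
    for name, port in APPS.items():
        print(render_server_block(name, port))
```

Each subdomain gets its own block, which is what makes it possible to attach a separate certificate and a separate upstream port to every application.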

Security Measures

Security is a top priority when self-hosting, especially when dealing with AI models that may process sensitive data. Here are some of the measures I’ve implemented:

- Tailscale: I’m using Tailscale to securely access my VPS. All other ports have been blocked to prevent unauthorized access from the internet.

- Localhost binding: All applications are bound to 127.0.0.1:PORT, so they listen only on the loopback interface and cannot be reached directly through the server's public network interfaces.

- Google Authentication: For an additional layer of security, I’ve implemented Google Authentication for accessing OpenWebUI.
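The localhost-binding rule above is easy to get wrong, because a Docker port mapping without a host IP is published on all interfaces. The following is a small sketch, not part of the original setup, of a helper that flags such mappings. The function name and the example ports are hypothetical, and it only handles the IPv4 forms of the mapping syntax.

```python
import ipaddress


def binds_to_loopback_only(port_mapping: str) -> bool:
    """Return True if a Docker-style port mapping publishes only on loopback.

    Accepts the IPv4 forms "HOST_IP:HOST_PORT:CONTAINER_PORT" and
    "HOST_PORT:CONTAINER_PORT", optionally with a "/tcp" or "/udp" suffix.
    Anything else (including bracketed IPv6 forms) is conservatively
    reported as not loopback-only.
    """
    spec = port_mapping.split("/", 1)[0]  # drop the optional protocol suffix
    parts = spec.split(":")
    if len(parts) != 3:
        # No explicit host IP, so the port is published on all interfaces.
        return False
    try:
        return ipaddress.ip_address(parts[0]).is_loopback
    except ValueError:
        # The first field was not a valid IP address.
        return False


if __name__ == "__main__":
    for mapping in ("127.0.0.1:3000:8080", "3000:8080", "0.0.0.0:3000:8080"):
        print(mapping, binds_to_loopback_only(mapping))
```

Running a check like this over every `ports:` entry before deploying a container is a cheap way to catch an application that would otherwise bypass the reverse proxy.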

The Setup Process

Setting up the VPS and deploying OpenWebUI involved several steps:

- Provisioning the VPS with Hostinger
  - Selected Ubuntu with CloudPanel for easy nginx proxy management
  - Chose the KVM 4 VPS plan for better performance and flexibility

- Configuring the domain DNS settings
  - Added subdomains in Bluehost
  - Pointed the subdomains at the VPS IP address

- Installing and configuring CloudPanel for reverse proxy management
  - Used CloudPanel for easy proxy management with nginx
  - Set up the reverse proxy and Let’s Encrypt SSL certificates for the various Docker-hosted apps

- Deploying Docker containers for OpenWebUI and other applications
  - Installed CasaOS for improved Docker and app management
  - Deployed OpenWebUI from the BigBearCasaOS repository
  - Customized Docker containers and shared them on GitHub for easy access

- Setting up Tailscale for secure remote access
  - Ran Tailscale in Docker, authenticating with an auth key
  - Blocked all other ports to prevent unauthorized access from the internet

- Configuring Google Authentication for OpenWebUI
  - Implemented Google Auth for apps with built-in support
  - Set up a Google Cloud client ID and secret
  - Used an OAuth2 plugin for nginx where native Google Auth wasn’t available

- Integrating various AI models (DeepInfra, OpenAI, Claude, Gemini) with OpenWebUI
  - Configured OpenWebUI to work with multiple AI models for increased flexibility and capabilities
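For the model-integration step, many providers expose an OpenAI-style chat completions endpoint, so switching providers often comes down to a different base URL, API key, and model name. The sketch below builds such a request with the standard library only and does not send it. The DeepInfra base URL is an assumption to verify against the provider's documentation, the model name and key are placeholders, and not every provider offers this format natively.

```python
import json
import urllib.request

# Base URLs are assumptions; confirm each one in the provider's own docs.
PROVIDERS = {
    "openai": "https://api.openai.com/v1",
    "deepinfra": "https://api.deepinfra.com/v1/openai",
}


def build_chat_request(
    base_url: str, api_key: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # "placeholder-model" and "sk-placeholder" are hypothetical values.
    req = build_chat_request(
        PROVIDERS["openai"], "sk-placeholder", "placeholder-model", "Hello"
    )
    print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would perform the actual call. In OpenWebUI itself, the same base URL and key pair is what gets entered when adding a connection.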

This setup provides a robust, secure, and flexible environment for hosting and managing various AI applications on the VPS.
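One step from the list that is worth verifying before requesting certificates is DNS: each subdomain should resolve to the VPS address. Here is a minimal sketch of such a check. The hostnames and the IP address are hypothetical documentation values, and the resolver is injectable so the function can be exercised without network access.

```python
import socket
from typing import Callable, Dict, Iterable


def find_dns_mismatches(
    hostnames: Iterable[str],
    expected_ip: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> Dict[str, str]:
    """Return {hostname: what it resolved to} for names not at expected_ip."""
    mismatches: Dict[str, str] = {}
    for name in hostnames:
        try:
            actual = resolve(name)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            actual = f"unresolved ({exc})"
        if actual != expected_ip:
            mismatches[name] = actual
    return mismatches


if __name__ == "__main__":
    # Hypothetical subdomains and a documentation-range IP address.
    names = ["chat.example.com", "flows.example.com"]
    print(find_dns_mismatches(names, "203.0.113.10"))
```

An empty result means every name points where it should. Freshly created records can take a while to propagate, so a mismatch right after a DNS change is not necessarily an error.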

Beyond OpenWebUI: A Hub of AI Tools

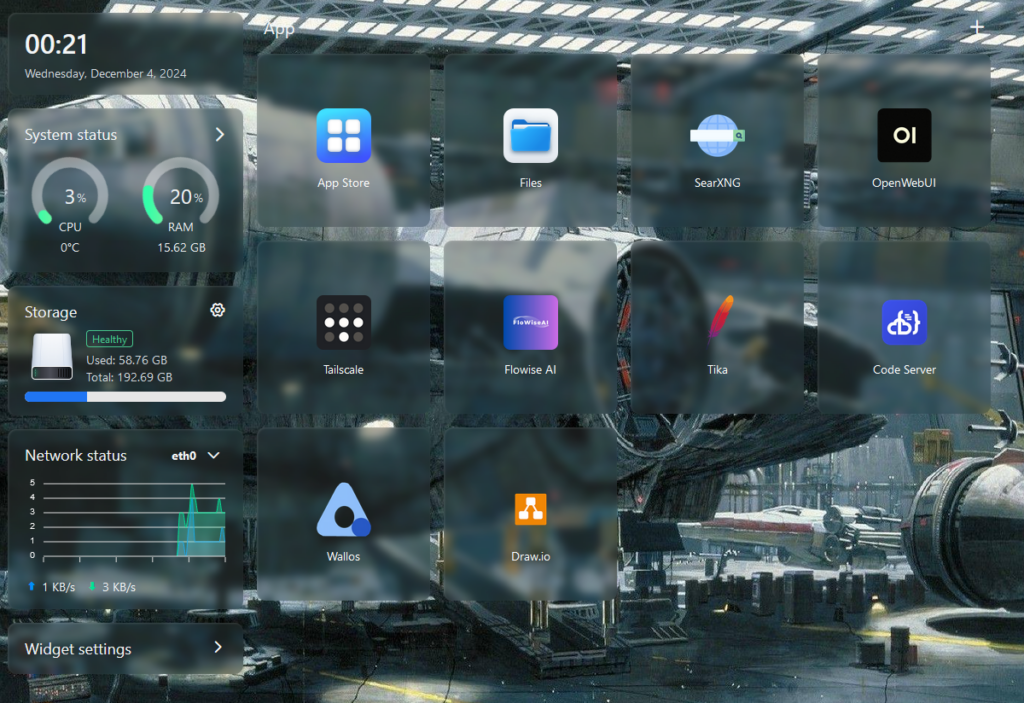

With the VPS set up, I’ve been able to deploy and experiment with a variety of AI tools and applications. Here’s a glimpse of what I’m currently running:

Each of these tools serves a specific purpose in my workflow, from AI-powered chatbots to workflow automation and code development.

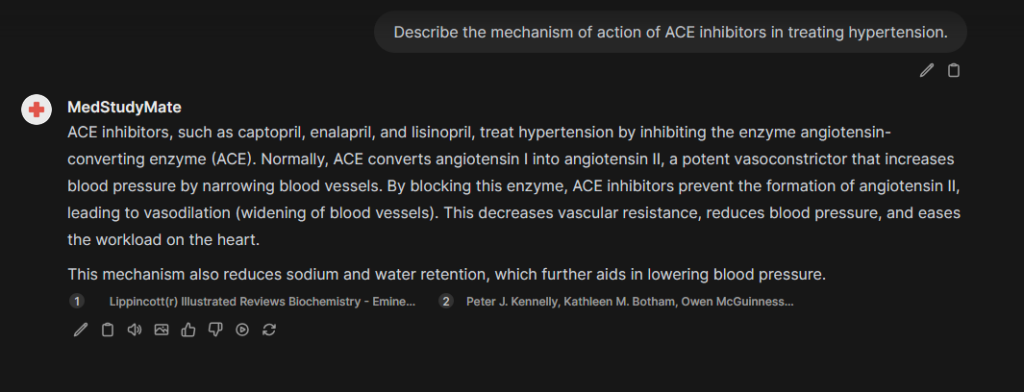

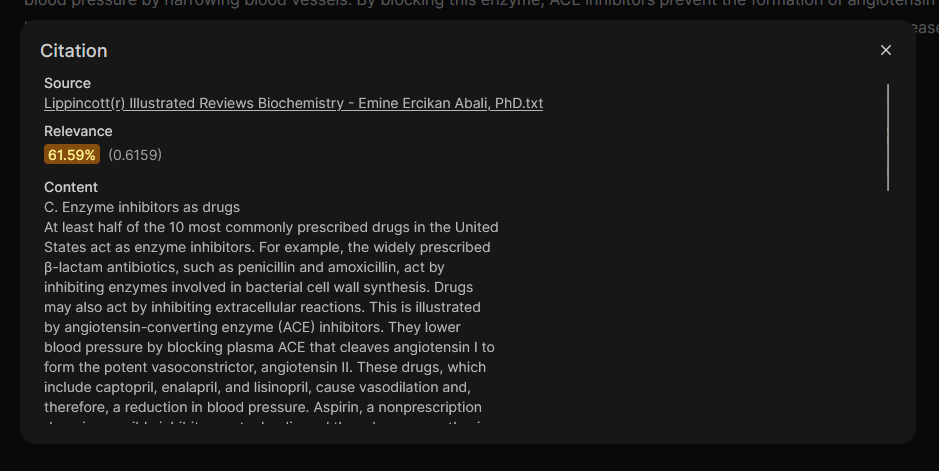

MedStudyMate: A Custom AI Study Companion

One of the most exciting projects I’ve been able to realize with this setup is MedStudyMate, a custom study model I created for my sister. This AI-powered tool has knowledge of all her course books and helps her study more effectively. It’s a perfect example of how self-hosting AI can lead to personalized solutions that wouldn’t be possible with off-the-shelf products.

The Benefits of Self-Hosting AI

Since making the switch to self-hosted AI solutions, I’ve experienced numerous benefits:

- Reduced costs: Despite the initial investment in VPS hosting, I’m saving money in the long run compared to multiple AI subscriptions.

- Increased privacy: By controlling the infrastructure, I have more control over data privacy and security.

- Customization: I can fine-tune models and create custom solutions like MedStudyMate.

- Experimentation: The ability to easily deploy and test different AI tools has accelerated my learning and innovation.

Conclusion

While setting up a self-hosted AI infrastructure requires some technical know-how and initial effort, the benefits far outweigh the challenges. Not only have I created a more cost-effective solution, but I’ve also gained valuable skills and the freedom to experiment with AI in ways I never could before.

If you’re considering a similar setup, I encourage you to take the plunge. The world of self-hosted AI is exciting, rewarding, and full of possibilities. Who knows what amazing projects you might create?

Remember, the journey of a thousand miles begins with a single step – or in this case, a single VPS instance. Happy hosting!
